Flow:

Set up Neurogrid

- Connect FPGA to leaf of 9

- Spoof FPGA as chip 15

- change xml config file so 15 is at leaf of 9

- comment out chip 17 in xml config file route (17 is normal FPGA)

- In bias-ZIF-6k.xml, change chip_15 route_to to match xml config file

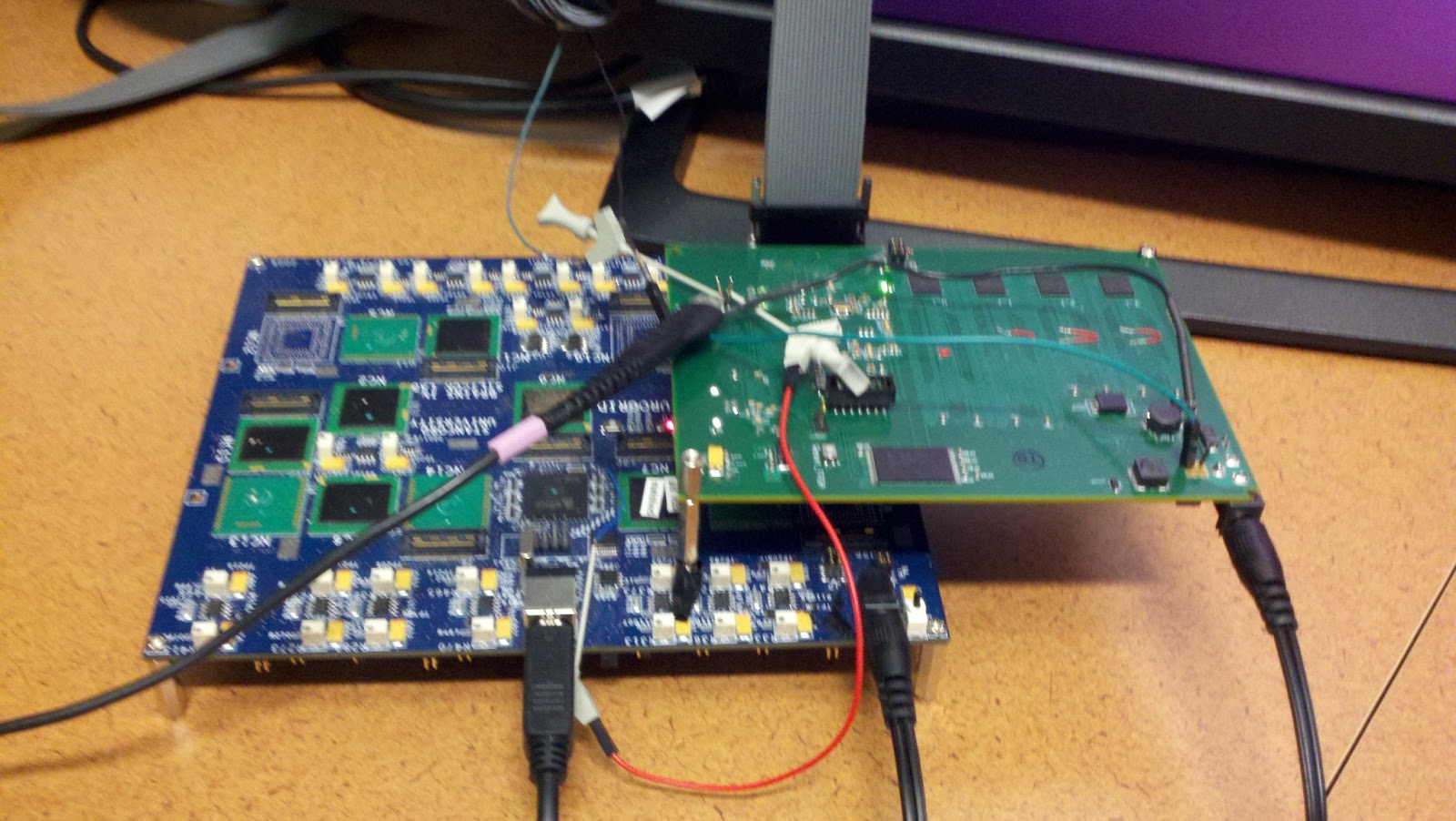

- Connect as shown

- Connect FPGA reset pin to Neurogrid reset

- Connect FPGA acknowledge pin to oscilloscope and logic analyzer

Set up Logic Analyzer

- load Stimulus\System1.tla configuration file

- go to setup > probes tab

- look for signals on channel 7

- Probes C3 and C2 should be all triggering except first channel on C3

- Start spring gui, and if software doesnt see sufficient signal, gui will time out.

- The board light will trip. This is normal for this experiment

Set up Software

In neuro-boa/apps/loopback_test/

In neuro-boa/apps/loopback_test/

- Edit loopback_parameters.py

- python loopback_parameters.py

- python generate_spikes.py

Run experiment

- In spring, run NEF/loopback_test/loopback_test.py

- Right before experiment starts (when terminal shows "Update called to replace child:" , run logic analyzer

- When logic analyzer stops, File > export data

- Transfer logic analyzer data to desktop

- Use loopback_test/ReadLAdataAllGroupsMultipleFilesStimulus.nb Mathematica script to parse logic analyzer data

- change input file names

- change output file names

- Use loopback_test/cleanLogicData.sh to clean the spike data

- change input file names

- python loopback_test/analyze_loopback.py to analyze the spike data